Story: Photographic Memory

[ The following is a work of fiction, written in July 2024 by a living being. ]

Some people say they have a photographic memory, but how good are your memories?

That was the question Meta asked a few years ago, in 2029. Facing spiraling storage costs and the expense of building new datacenters, the company took a novel approach to saving storage space.

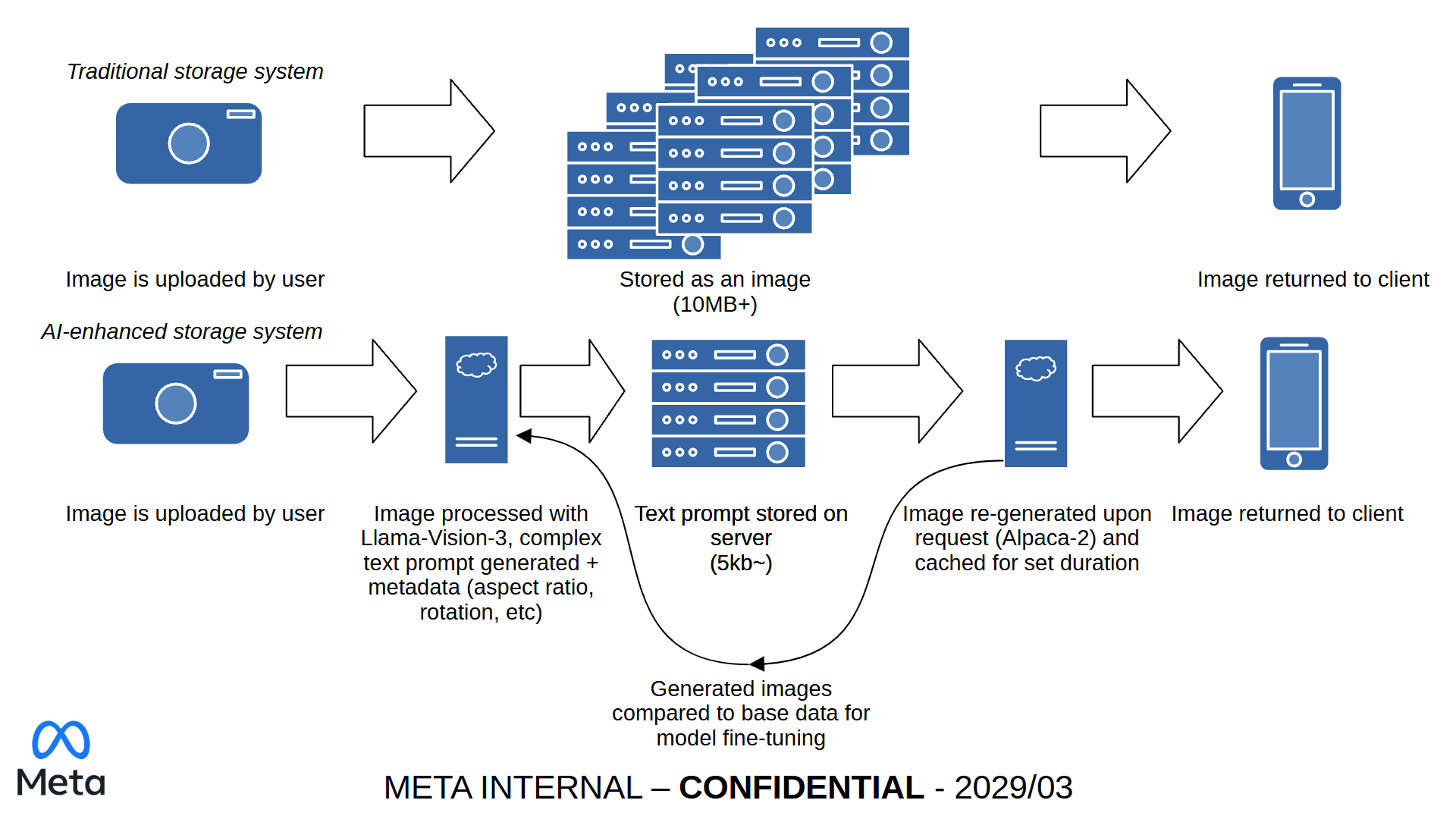

Pictures uploaded more than 15 years earlier would be deleted, yet still be retrievable later. How, you might ask? The company would use a computer vision model (Llama-Vision-3) to analyze each photograph and turn it into a prompt, allowing the image to be regenerated later by a separate generative image model (Alpaca-2). Instead of storing a large image that might be several megabytes in size, Meta could save a detailed text prompt of a kilobyte or less and recreate the image at a later date.

The generative models were trained on Meta’s large library of photographs uploaded by its users over the years, using a combination of accessibility text, computer vision models, and outsourced staff to label the images and provide metadata for training. This also meant that every Facebook and Instagram user’s face was learned by the model, allowing it to replicate users more accurately. The model was reportedly retrained every month. This unusual approach saved Meta tens of millions of dollars a year in server costs and allowed it to shut down several datacenters across Europe and North America.

Users quickly noticed, though, that while impressive, the generated images didn’t always match their memories. Family members would appear with altered facial features, in clothing they never wore, or even with a different skin tone entirely. Most infamous was a regenerated photograph of President Harris with Donald Trump as her Vice President, the model having seemingly lost context or been influenced by the glut of AI-generated misinformation from the 2028 US election. The model often drifted towards the likeness of famous public figures such as celebrities and politicians, a tendency theorized to stem from their prevalence in the dataset Meta had assembled. Various teams within Meta began extensive rounds of safety testing and red teaming, refining the models and gradually reducing hallucinations. However, even in its final iteration, the model was not without its flaws.

A new form of misuse, called “relayed prompt injection”, became prominent. While Meta scanned the images themselves for nudity or illegal content, its systems did not scan the accessibility text, so some users entered false descriptions and used the system to generate abusive or sexually explicit images that would then be treated as the original. Misuse ranged from racism and transphobia to illicit pornographic deepfakes of public figures. Eventually Meta began passing accessibility text through a safety model and went back through the previous five months of generated images looking for misuse.

Eventually, after two years of protest, backlash, and several controversies, Meta pulled the plug on the project in 2031. Users were upset that their photographs had been erased without consent; some had no backups and had relied on the website to store their data securely. Some people lost holiday albums, baby photos, or the last memories of a long-deceased relative. Conspiracy theories abounded that Meta had used the plan to lay off thousands of workers and cut costs by scrapping old data. Meta was fined €8,000,000 by the European Union for misuse of customer data, but otherwise escaped financially unharmed, and in a better position than it had been a few years earlier.

Plans to extend the feature to uploaded videos were shelved, and several key members of Meta’s artificial intelligence team were laid off as a result. These events slowed Meta’s progress in AI considerably, forcing the company to step back and evaluate the usefulness of such systems. The company still offers several generative services, such as image generation and a music creation platform.